Pi & self-driving cars

Photo: Roberto Nickson / Unsplash.com

Inattentive drivers, children running into the street and e-bikers going far too fast: driving a car is dangerous if you are not careful behind the wheel. Yet many companies, such as Google, Aurora and Tesla, have been developing self-driving cars for some time now. But how does that actually work? How can a car keep an eye on the road by itself?

A self-driving car from Uber with a driver behind the wheel, ready to intervene. Source: The New York Times.

To participate safely in traffic, a self-driving car must be able to see at least as well as a human driver, and preferably better. In the picture above you can see all kinds of measuring equipment on top of the car. Part of it consists of cameras that are cleverly positioned to create a 360° view of the environment. These cameras capture the surroundings in high definition. In addition, the images are in color, so that traffic signs can be read correctly. These cameras have only one drawback: with cameras alone, the car cannot see depth. In other words, the car cannot yet, like us, see in 3D.

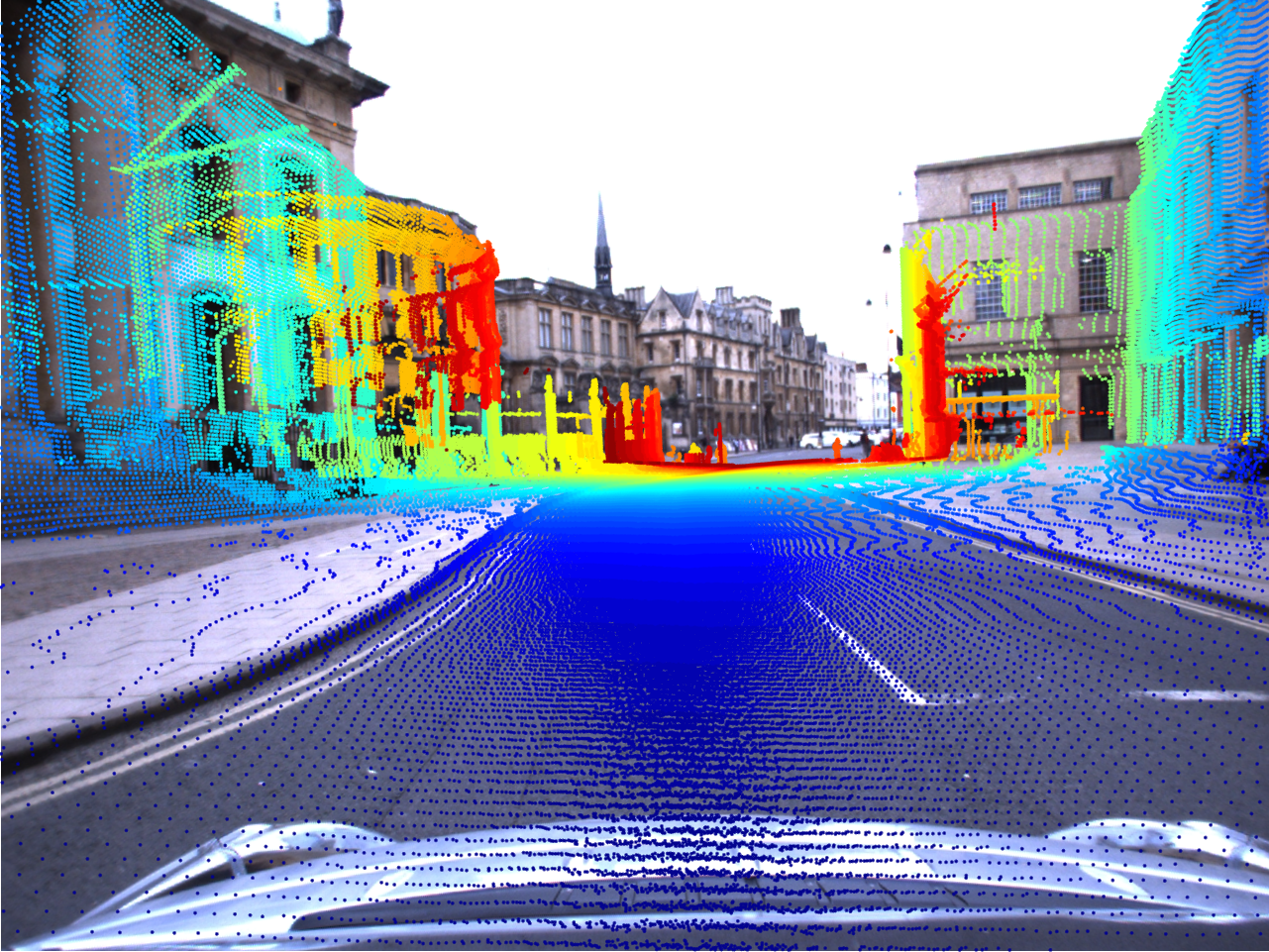

A 3D point cloud of a road and its surroundings. Source: TowardsDataScience.

To solve this problem, there is something else on the roof of the car: Lidar. Lidar technology allows self-driving cars to convert their surroundings into a 3D point cloud, as you can see above. Lidar sensors shoot invisible laser beams at objects in the surroundings. The objects reflect the laser beams back to the sensors, and from the time each beam takes to return, the car calculates the distance to the object. The animation below shows this process nicely. Lidar thus adds depth to the surroundings.

An animation of Lidar technology on self-driving cars. Source: Waymo / The Unlockr.
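
To make the distance calculation concrete, here is a minimal Python sketch. It assumes the sensor works by time of flight, meaning it measures how long a laser pulse takes to travel to the object and back, which the article does not spell out. The function name `distance_from_round_trip` is made up for this illustration and does not come from any real Lidar software.

```python
# Speed of light in a vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Distance to an object in metres, given the pulse's round-trip time.

    Assumption: a simple time-of-flight measurement in which the pulse
    travels at the vacuum speed of light (air slows it only marginally).
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (there and back), so halve it.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


# A pulse that comes back after 200 nanoseconds hit something about 30 m away.
print(distance_from_round_trip(200e-9))
```

The division by two is the step that is easy to forget: the measured time covers the trip to the object and the trip back.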

So the advantage of Lidar is depth and a 3D view. The advantage of normal cameras is color and the quality of the image in 2D. The self-driving car wants to combine these two advantages. To do this, it needs to merge the 3D images with the 2D images. In this way, the car sees the surroundings in high quality and at the same time it can estimate how far each point in the surroundings is from the car.

Lidar points combined with camera images. Source: University of Oxford.

Combining camera images and Lidar points looks like the picture above. Each object captured by the camera also exists in the 3D point cloud obtained by Lidar. The car must now ensure that the camera images and the 3D points are merged correctly. Since Lidar and the cameras observe the entire surroundings in a 360° view, this is done using a circle and angles. Simply put, an angle is assigned to each 2D point of the camera and each 3D point of the Lidar. If a cyclist is standing exactly in front of the car, these points receive an angle of 0°. If the cyclist is standing exactly behind the car, the points receive an angle of 180°.

You can think of the angles as labels. Each point now has a label (its angle), and by matching the labels you can combine the 2D camera images with the 3D Lidar points. So where does the constant π come in? In everyday use, angles are expressed in degrees. In science and engineering, angles are more often expressed in radians, and the constant π appears regularly when using radians.
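
The labelling idea can be sketched in a few lines of Python. This is a simplified illustration, not how any particular car does it: the axis convention (x pointing forward, y pointing left), the evenly spaced 360° panorama and the function names `azimuth` and `panorama_column` are all assumptions made for this example.

```python
import math


def azimuth(x: float, y: float) -> float:
    """Angle of a Lidar point around the car, in radians, wrapped to 0..2*pi.

    Assumed axes: x points forward from the car, y points to the left.
    A point straight ahead gets 0, a point straight behind gets pi.
    """
    return math.atan2(y, x) % (2 * math.pi)


def panorama_column(angle: float, image_width: int) -> int:
    """Column of a 360-degree panorama that looks in the direction `angle`.

    Assumption: column 0 faces straight ahead and the columns sweep the
    full circle in equal steps.
    """
    return int(angle / (2 * math.pi) * image_width) % image_width


# A cyclist 5 m straight ahead and another 5 m straight behind the car.
for name, (x, y) in {"ahead": (5.0, 0.0), "behind": (-5.0, 0.0)}.items():
    angle = azimuth(x, y)
    column = panorama_column(angle, image_width=3600)
    print(f"{name}: angle = {angle:.4f} rad, image column = {column}")
```

The angle acts as the shared label: the Lidar point and the image column that carry the same angle belong to the same direction around the car, so the color from the image can be paired with the distance from the Lidar.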

An angle of 360° equals 2π radians, an angle of 180° equals π radians, and so on. The merging of the 3D point clouds with the 2D camera images is thus done using angles expressed in terms of π. Because radians are so common in science, we see π popping up in all kinds of applications that involve angles. And therefore also in self-driving cars!
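
For readers who want to check the conversion themselves, here is a small Python sketch. The helper name `degrees_to_radians` is chosen for this example; Python's standard library offers the same conversion as `math.radians`.

```python
import math


def degrees_to_radians(degrees: float) -> float:
    """Convert an angle from degrees to radians (180 degrees = pi radians)."""
    return degrees * math.pi / 180.0


# Print a few familiar angles as multiples of pi.
for deg in (0, 90, 180, 360):
    rad = degrees_to_radians(deg)
    print(f"{deg:>3} degrees = {rad / math.pi:g} * pi radians")
```

Dividing the result by π shows the pattern directly: a half turn is 1 times π and a full turn is 2 times π.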